Building an On-Chain Token Analytics Tool – Gemmer Case Study


Key Requirements for Building a Token Analytics Tool

- Detecting new tokens and liquidity pairs across multiple blockchains and DEXs in real time.

- Tracking early buyers, categorizing wallets by behavior, and analyzing purchase patterns.

- Aggregating and standardizing data from heterogeneous networks into consistent, structured signals.

- Keeping end-to-end latency low so alerts can be generated within seconds of token creation.

- Scaling with high transaction volumes without impacting data consistency or signal accuracy.

System Architecture Overview for On-Chain Token Tracking

The system is built around a set of bots, each following the same core workflow:

- Pair creation monitoring

Each bot tracks the creation of new liquidity pairs in real time across its assigned DEXs and launchpads.

- Purchase aggregation

When a new token appears, the system records all purchases of that token in pairs with the blockchain’s native asset (e.g., SOL, ETH) or stablecoins (USDC, USDT).

- Signal trigger

Once the cumulative number of purchases for a token across all monitored DEXs reaches a predefined threshold, the system generates and dispatches a signal.

- Signal uniqueness

Each token generates only one signal, regardless of how many pairs or DEXs it appears on.
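The trigger and uniqueness rules above can be sketched in a few lines. This is a minimal illustration rather than the production logic: the `SignalTracker` class, its method names, and the threshold value are all hypothetical, since the source does not state the actual threshold or data model.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field


@dataclass
class SignalTracker:
    """Counts purchases per token across DEXs and fires at most one signal per token."""

    threshold: int  # hypothetical: the real threshold is not given in the source
    per_dex: dict = field(default_factory=lambda: defaultdict(Counter))
    signaled: set = field(default_factory=set)

    def record_purchase(self, token: str, dex: str) -> bool:
        """Register one purchase; return True only if it triggers the token's signal."""
        if token in self.signaled:
            return False  # uniqueness: a token never signals twice
        self.per_dex[token][dex] += 1
        # The trigger uses the cumulative count over every monitored DEX.
        total = sum(self.per_dex[token].values())
        if total >= self.threshold:
            self.signaled.add(token)
            return True
        return False
```

Keying the `signaled` set by token address, not by pair, is what would keep a token listed on several DEXs from producing more than one signal.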

Bot Specification

Bot 1 (Solana): Pump.fun

Bot 2 (Solana): Pumpswap, Raydium, LaunchLab, Meteora, Meteora DBC

Bot 3 (Ethereum): Uniswap v2, Uniswap v3, Uniswap v4

Bot 4 (Base): Uniswap v2, Uniswap v3, Uniswap v4, Aerodrome

Bot 5 (BNB Chain): PancakeSwap v2, PancakeSwap v3, 4meme launchpad
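One way to express this specification is as static configuration. The sketch below only restates the list above; the `BotConfig` type and the `bots_for_chain` helper are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BotConfig:
    name: str
    chain: str
    sources: tuple  # DEXs and launchpads this bot monitors


BOTS = (
    BotConfig("Bot 1", "Solana", ("Pump.fun",)),
    BotConfig("Bot 2", "Solana", ("Pumpswap", "Raydium", "LaunchLab", "Meteora", "Meteora DBC")),
    BotConfig("Bot 3", "Ethereum", ("Uniswap v2", "Uniswap v3", "Uniswap v4")),
    BotConfig("Bot 4", "Base", ("Uniswap v2", "Uniswap v3", "Uniswap v4", "Aerodrome")),
    BotConfig("Bot 5", "BNB Chain", ("PancakeSwap v2", "PancakeSwap v3", "4meme launchpad")),
)


def bots_for_chain(chain: str) -> list:
    """Return every bot assigned to the given chain."""
    return [bot for bot in BOTS if bot.chain == chain]
```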

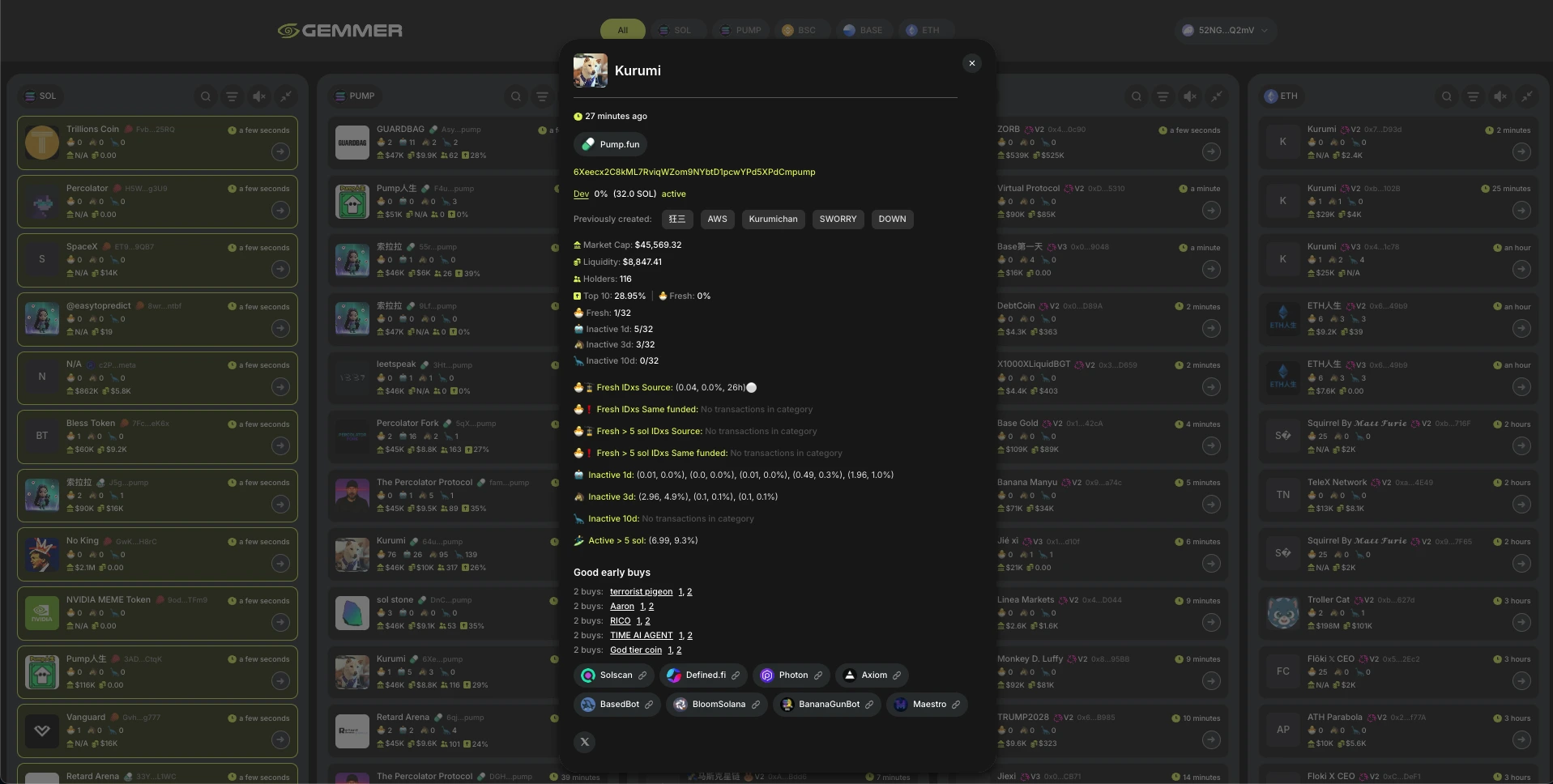

Signal Structure

Each signal is a structured message containing multiple layers of information:

1. Token metadata

- Token address: e.g., CoCkPn1rLVumueRLxtBfr1oGJ4sXcKfBDktPCNAkdA2D

- Market capitalization (MC): e.g., $176,919

- Total liquidity (Liq): e.g., $19,831

- Number of holders: e.g., Holders: 127

- Holder distribution:

  - Top 10: 23.2% (percentage of total supply held by the top 10 wallets)

  - Fresh: 56.1% (percentage held by first-time buyers, or “Fresh” wallets)

2. Additional analytics

- Good early buys: tokens previously purchased by two or more of the current token’s buyers, useful for identifying wallets with strong investment patterns

- Links: social and market references, e.g., Twitter, Telegram, website, Dexscreener

3. Creator information (launchpads only)

- Dev: wallet address of the token creator

- Ownership: percentage of total supply held by the creator, e.g., 0.0%

- Native token balance: e.g., (19.8 SOL)

- Activity status: e.g., active

- Previously deployed tokens: list of other tokens created by this address, e.g., (BNBTARD, 404, 404, Henry, Coin)
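Two of the fields above are derived values, and a short sketch may make their definitions concrete. The function names and input shapes here are assumptions made for illustration: `balances` maps wallet to token balance, and `history` maps wallet to the tokens it bought earlier.

```python
from collections import Counter


def top10_share(balances: dict, total_supply: float) -> float:
    """Percentage of total supply held by the 10 largest wallets."""
    if total_supply <= 0:
        return 0.0
    top = sorted(balances.values(), reverse=True)[:10]
    return 100.0 * sum(top) / total_supply


def good_early_buys(buyers: list, history: dict, current_token: str, min_buyers: int = 2) -> list:
    """Tokens previously bought by at least `min_buyers` of the current token's buyers."""
    counts = Counter()
    for wallet in set(buyers):
        # Count each wallet at most once per token, even if it bought repeatedly.
        for token in set(history.get(wallet, ())):
            if token != current_token:
                counts[token] += 1
    return sorted(token for token, n in counts.items() if n >= min_buyers)
```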

Buyer Categories and Transaction Details

Buyers are classified based on prior activity and purchase size:

- Fresh IDxs

  - Wallets that have never purchased any tokens before (“Fresh”)

  - Purchase amount ≤5 SOL (or equivalent in other blockchains)

- Fresh >5 SOL IDxs

  - First-time wallets with purchases >5 SOL (or equivalent)

- Inactive 3d IDxs

  - Wallets that haven’t made any token purchases in the last 3 days

- Inactive 10d IDxs

  - Wallets inactive for the last 10 days
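The categories above could be assigned with a function along these lines. It is a sketch under two assumptions not stated in the source: a wallet inactive for 10 days is reported only in the 10-day bucket, and wallets that bought within the last 3 days receive no category. The function and constant names are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Optional

FRESH_LIMIT = 5.0  # SOL, or the equivalent on other chains


def classify_buyer(last_purchase: Optional[datetime], amount: float, now: datetime) -> Optional[str]:
    """Return the buyer category, or None for a recently active wallet."""
    if last_purchase is None:
        # No earlier token purchase on record: a "Fresh" wallet, split by purchase size.
        return "Fresh" if amount <= FRESH_LIMIT else "Fresh >5 SOL"
    idle = now - last_purchase
    if idle >= timedelta(days=10):
        return "Inactive 10d"  # assumption: the most specific bucket wins
    if idle >= timedelta(days=3):
        return "Inactive 3d"
    return None
```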

For each transaction in the “Fresh” categories, the system records:

- Purchase amount: in the blockchain’s native asset

- % of total supply bought: share of the token acquired in that transaction

- Wallet top-up timestamp: last time the wallet received funds

- Funding source:

  - Unknown: EOA wallet

  - Known: CEX / bridge / mixer

  - Same Funded: multiple buyers funded from the same wallet (EOA), which may indicate coordinated activity or use of “camps”

This categorization allows the system to distinguish between organic and potentially coordinated early activity, providing a granular view of token adoption dynamics.
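As an illustration of the funding-source labels, the sketch below groups buyers by their funding wallet and flags EOA funders shared by two or more buyers. The input shape (buyer mapped to a `(funder_address, kind)` pair) and the `kind` strings are hypothetical. Known sources are deliberately excluded from the grouping, on the assumption that many unrelated wallets share the same exchange or bridge address.

```python
from collections import defaultdict

KNOWN_KINDS = {"cex", "bridge", "mixer"}  # hypothetical labels for known funding sources


def funding_label(kind: str) -> str:
    """Map a funder kind to the signal's Known / Unknown label."""
    return "Known" if kind in KNOWN_KINDS else "Unknown"


def same_funded_groups(funders: dict) -> dict:
    """Return {funder EOA: [buyers]} for every EOA that funded two or more buyers."""
    groups = defaultdict(list)
    for buyer, (funder, kind) in funders.items():
        if kind == "eoa":  # only plain wallets count toward "Same Funded"
            groups[funder].append(buyer)
    return {f: sorted(b) for f, b in groups.items() if len(b) >= 2}
```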

Challenges in High-Volume Blockchain Data Ingestion

1. Database performance under high-volume ingestion

Tracking thousands of tokens and millions of transactions across multiple chains creates high-cardinality tables and complex query paths. Without intervention, queries on these tables can become slow, affecting downstream processing.

Solution:

- Batch queries were introduced for grouped operations, and indexes were added to high-traffic fields.

- A background pruning process removes stale data automatically, keeping the working set size manageable.

2. Infrastructure challenges (mainly Solana)

Node reliability

Many Solana node providers couldn’t handle high transaction volumes and lacked complete historical event data.

High block rate and transaction volume

With blocks produced roughly every 400 ms and a high transaction volume, collecting and processing all events in real time is challenging.

Solutions:

- Provider selection

After testing multiple Solana node providers, we chose Quicknode with gRPC streaming. It reliably delivered the full set of historical and real-time events without restrictive limits.

- Custom indexer

We built a high-performance indexer to take full control over data ingestion. This allowed us to handle Solana’s high block rate and transaction volume efficiently.

- Aggregator service

On top of the indexer, we added an aggregator that processes raw data and serves it in a structured format to downstream consumers (Pump bot, Solana bot). This separation of responsibilities improved both reliability and maintainability.
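The split between indexer, aggregator, and consumers might look roughly like the sketch below. It is not the actual service: the raw event fields (`mint`, `signer`, `lamports`, `program`), the `Purchase` type, and the callback-based routing are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Purchase:
    token: str
    buyer: str
    amount_sol: float
    source: str


class Aggregator:
    """Normalizes raw indexer events and routes them to subscribed consumers."""

    def __init__(self) -> None:
        self._routes = {}  # source name -> list of consumer callbacks

    def subscribe(self, sources, consumer) -> None:
        """Register a consumer callback for each of the given sources."""
        for source in sources:
            self._routes.setdefault(source, []).append(consumer)

    def handle_raw(self, raw: dict) -> None:
        """Convert one raw event into a structured Purchase and fan it out."""
        event = Purchase(
            token=raw["mint"],
            buyer=raw["signer"],
            amount_sol=raw["lamports"] / 1_000_000_000,  # 1 SOL = 1e9 lamports
            source=raw["program"],
        )
        for consumer in self._routes.get(event.source, []):
            consumer(event)
```

With this shape, the Pump bot would subscribe to `"Pump.fun"` events and the Solana bot to the remaining sources, so neither consumer needs to parse raw chain data itself.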

Architecture Impact and Operational Results

- Early-stage token monitoring: Captures new token launches across multiple blockchains in real time.

- Buyer behavior analysis: Classifies wallets by activity and purchase size, separating first-time buyers from returning or coordinated investors.

- Transaction-level detail: Records purchase amounts, share of total supply, wallet top-up timestamps, and funding sources to provide a granular view of token distribution.

- Scalable data ingestion: Batch queries, targeted indexes, and background pruning maintain performance when processing millions of transactions across high-cardinality tables.

- Blockchain data processing: Custom indexers and aggregator layers handle high block rates and transaction volumes, structuring data for downstream consumers.

- Market coverage: Parallel indexing across chains and DEXs provides extensive visibility without impacting latency or data consistency.
